AI Agents as Eager Interns

When I think about how we should approach Agentic AI, I often come back to the analogy of mentoring a new intern. The comparison may sound lighthearted, but it holds remarkably well. An intern arrives full of potential and enthusiasm, yet without much experience in how things actually get done. Agentic AI systems are much the same. They show immense promise, but they still need structure, supervision, and a good deal of human judgment to bring that promise to life.

When you first start working with an AI agent, the rule of thumb is simple: begin small. Assign tasks that are well-defined and easy to verify. Keep a human firmly in the loop. Every output should be reviewed, every conclusion tested. It might feel painstaking at first, but this is where you lay the key groundwork. The AI is not learning in the way a person does, but you are learning how to get the best results from it and, crucially, what it can and cannot be trusted to do.

Over time, as the agentic system improves through better prompting, data, and guardrails, the dynamic begins to shift. The AI becomes increasingly reliable on familiar, repetitive work, and you can start to step back, allowing it to handle those tasks without constant supervision. That is less a moment for celebration than a sign of progress. The real test comes when you begin introducing new and more complex work. This is where the human-in-the-loop returns to the foreground, providing the careful oversight that ensures the AI's confidence does not outrun its competence.

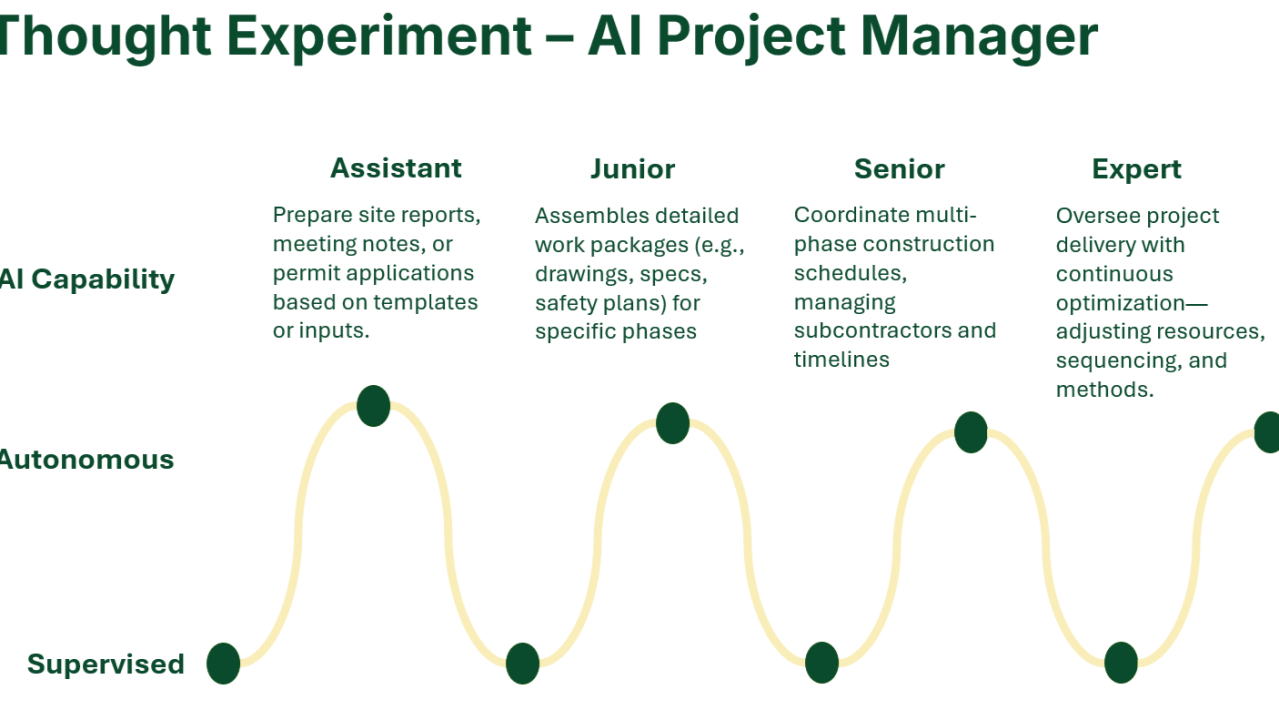

A good example comes from construction project management. A junior project manager begins with the basics: updating schedules, tracking materials, and logging daily site reports. Every item is reviewed by a senior manager, and mistakes are caught early. As their experience grows, they take on more responsibility, perhaps coordinating subcontractors or handling routine change requests while still checking in for approval. Eventually, they are trusted to manage whole phases of the project on their own, while senior staff step in only when complex design issues or major decisions arise. Agentic AI follows the same developmental arc. The more familiar the terrain, the more autonomy it earns, yet it still relies on expert oversight when the work moves into new territory.

I find it useful to think of this development as a cycle rather than a straight line.

- Start with simple, repeatable processes.

- Keep human review as the default.

- Gradually loosen supervision where performance is proven.

- Introduce more complex work, and return to closer oversight.

The process repeats, but each loop brings more maturity and better collaboration. The intern who once needed constant guidance becomes capable of handling whole categories of work independently. The same progression can happen with AI, provided we manage the relationship thoughtfully.

Ultimately, the goal is to create a partnership in which human expertise and machine efficiency reinforce each other. When we treat Agentic AI as an intern rather than an oracle, we give it the opportunity to grow into a trustworthy colleague, one capable of amplifying our work without ever losing the grounding of human judgment.